PyTorch Introduction

To install PyTorch on Linux (Ubuntu), run the following commands:

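```shell
$ sudo apt install python3-pip python3 python3-dev
$ pip3 install torch torchvision torchaudio notebook
```

On Ubuntu 23.04 and later, pip may refuse to install into the system Python with an "externally-managed-environment" error. In that case, create and activate a virtual environment first (for example with `python3 -m venv`) and run the `pip3` command inside it.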
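A quick way to confirm that the installation worked is to check that Python can find each package. This is a minimal sketch using only the standard library's `importlib.util.find_spec`; `check_packages` is a hypothetical helper name, and it only checks that the modules are importable, not which versions are installed.

```python
import importlib.util

def check_packages(names):
    """Return a dict mapping each module name to True if Python can find it."""
    return {name: importlib.util.find_spec(name) is not None for name in names}

# Import names of the packages installed above
status = check_packages(["torch", "torchvision", "torchaudio", "notebook"])
for name, found in status.items():
    print(f"{name}: {'installed' if found else 'missing'}")
```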

The MNIST dataset contains images of handwritten digits 0 to 9, as shown below.

[Figure: MNIST Dataset]
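For reference, each MNIST image is 28 × 28 grayscale pixels. The preprocessing in the code applies `transforms.ToTensor()`, which scales pixel values to [0, 1], and then `transforms.Normalize((0.5,), (0.5,))`, which subtracts 0.5 and divides by 0.5 (a fixed choice here, not MNIST's measured mean and standard deviation). The network then flattens each image into one input vector:

$$
x_{\text{norm}} = \frac{x - 0.5}{0.5} = 2x - 1, \qquad x \in [0, 1] \;\Rightarrow\; x_{\text{norm}} \in [-1, 1]
$$

$$
\text{input size} = 28 \times 28 = 784
$$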

Given the MNIST dataset, use deep learning with PyTorch to analyze the test accuracy for the following hyperparameter values:

1. Number of epochs: 4, 5, 6 and 7

2. Batch size (batch_size): 64 and 128

3. Number of hidden layers: 1 and 2

4. Learning rate: 0.001, 0.002 and 0.003

Compute the test accuracy for every combination of these values.
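The four settings above define a full grid of 4 × 2 × 2 × 3 = 48 training runs. As a rough sketch of the workload, the snippet below enumerates that grid with `itertools.product` and counts optimizer updates per epoch for each batch size. It assumes MNIST's standard 60,000-image training split and PyTorch's default `DataLoader` behaviour of keeping the final, smaller batch (`drop_last=False`); `TRAIN_SIZE` and `steps_per_epoch` are illustrative names.

```python
import itertools
import math

# Hyperparameter values from the task description
num_epochs_list = [4, 5, 6, 7]
batch_size_list = [64, 128]
num_hidden_layers_list = [1, 2]
learning_rate_list = [0.001, 0.002, 0.003]

TRAIN_SIZE = 60_000  # assumption: standard MNIST training split

# Every combination of the four hyperparameters, one tuple per training run
grid = list(itertools.product(num_epochs_list, batch_size_list,
                              num_hidden_layers_list, learning_rate_list))
print(f"Number of runs: {len(grid)}")

def steps_per_epoch(batch_size, n=TRAIN_SIZE):
    # The last, smaller batch still counts as a step when drop_last=False
    return math.ceil(n / batch_size)

for bs in batch_size_list:
    print(f"batch_size={bs}: {steps_per_epoch(bs)} optimizer steps per epoch")
```

Doubling the batch size roughly halves the number of parameter updates per epoch, which is one reason accuracy can differ between batch sizes 64 and 128 at the same learning rate and epoch count.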

Run the following code in either a Jupyter Notebook or Google Colab.

To start Jupyter Notebook locally, run:

```shell
$ python3 -m notebook
```

```python
import torch
import torch.nn as nn
import torch.optim as optim
from torch.utils.data import DataLoader
from torchvision import datasets, transforms

# Define a simple fully connected neural network model
class SimpleNN(nn.Module):
    def __init__(self, input_size, num_hidden_layers, hidden_size, output_size):
        super().__init__()
        self.flatten = nn.Flatten()
        # The first hidden layer maps input_size -> hidden_size; any later ones map hidden_size -> hidden_size
        self.hidden_layers = nn.ModuleList(
            [nn.Linear(input_size if i == 0 else hidden_size, hidden_size)
             for i in range(num_hidden_layers)]
        )
        self.output_layer = nn.Linear(hidden_size, output_size)
        self.relu = nn.ReLU()

    def forward(self, x):
        x = self.flatten(x)
        for layer in self.hidden_layers:
            x = self.relu(layer(x))
        # Return raw logits: nn.CrossEntropyLoss applies log-softmax internally,
        # so a Softmax layer here would apply softmax twice
        return self.output_layer(x)

# Load and preprocess MNIST data using PyTorch
transform = transforms.Compose([transforms.ToTensor(), transforms.Normalize((0.5,), (0.5,))])
train_dataset = datasets.MNIST('./data', train=True, download=True, transform=transform)
test_dataset = datasets.MNIST('./data', train=False, download=True, transform=transform)

# The test batch size does not affect accuracy, so one fixed test loader is enough
test_loader = DataLoader(test_dataset, batch_size=64, shuffle=False)

# Define function to create and train the PyTorch model
def create_and_train_model(num_epochs, batch_size, num_hidden_layers, learning_rate):
    input_size = 28 * 28
    hidden_size = 128
    output_size = 10

    # Build the training loader here so that the batch_size argument takes effect
    train_loader = DataLoader(train_dataset, batch_size=batch_size, shuffle=True)

    model = SimpleNN(input_size, num_hidden_layers, hidden_size, output_size)
    criterion = nn.CrossEntropyLoss()
    optimizer = optim.Adam(model.parameters(), lr=learning_rate)

    model.train()
    for epoch in range(num_epochs):
        for data, labels in train_loader:
            optimizer.zero_grad()
            outputs = model(data)
            loss = criterion(outputs, labels)
            loss.backward()
            optimizer.step()

    # Evaluate on the test set and return the accuracy
    model.eval()
    correct = 0
    total = 0
    with torch.no_grad():
        for data, labels in test_loader:
            outputs = model(data)
            predicted = outputs.argmax(dim=1)  # index of the highest score = predicted digit
            total += labels.size(0)
            correct += (predicted == labels).sum().item()

    accuracy = correct / total
    return accuracy
```
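`nn.CrossEntropyLoss`, used in the training function, expects raw unnormalized scores (logits) because it applies log-softmax internally, so the model's forward pass should not end with `nn.Softmax`. The pure-Python sketch below (no PyTorch needed; `softmax` and `cross_entropy_from_logits` are illustrative helpers that mirror the loss for a single 10-class sample) shows what goes wrong if softmax outputs are fed into the loss: even a perfect one-hot prediction cannot push the loss below a fixed floor.

```python
import math

def softmax(xs):
    # Subtract the max for numerical stability
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy_from_logits(logits, target):
    # Same formula as nn.CrossEntropyLoss for one sample: -log(softmax(logits)[target])
    return -math.log(softmax(logits)[target])

logits = [8.0] + [0.0] * 9  # a confident score for class 0 out of 10 classes
correct_use = cross_entropy_from_logits(logits, 0)
double_softmax = cross_entropy_from_logits(softmax(logits), 0)

# Best case after softmax: a one-hot probability vector still gives a large loss
one_hot = [1.0] + [0.0] * 9
loss_floor = cross_entropy_from_logits(one_hot, 0)

print(f"loss on logits:                   {correct_use:.4f}")
print(f"loss on softmax output:           {double_softmax:.4f}")
print(f"best possible loss after softmax: {loss_floor:.4f}")
```

With ten classes the floor works out to log(1 + 9/e), so the loss, and therefore the gradient signal, stays distorted no matter how confident the model becomes.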

```python
# Define hyperparameters
num_epochs_list = [4, 5, 6, 7]
batch_size_list = [64, 128]
num_hidden_layers_list = [1, 2]
learning_rate_list = [0.001, 0.002, 0.003]
```

# Perform the analysis

for num_epochs in num_epochs_list:

for batch_size in batch_size_list:

for num_hidden_layers in num_hidden_layers_list:

for learning_rate in learning_rate_list:

print(f"Epochs: {num_epochs}, Batch Size: {batch_size}, Hidden Layers: {num_hidden_layers}, Learning Rate: {learning_rate}")

accuracy = create_and_train_model(num_epochs, batch_size, num_hidden_layers,

learning_rate)

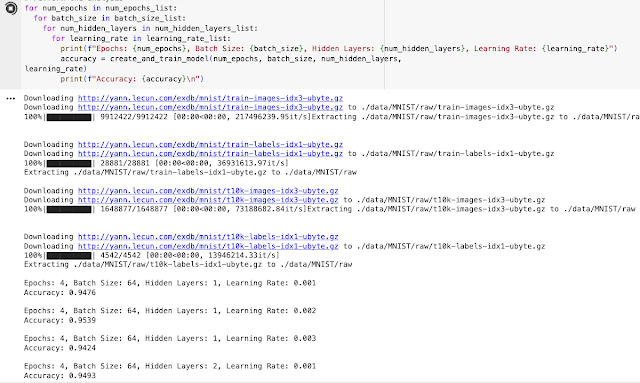

print(f"Accuracy: {accuracy}\n") The following is the output we got from Google Colab

Figure: Simple Neural Network using PyTorch
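Scanning 48 printed lines to find the best setting is tedious. One option, sketched here, is to collect each run into a list and rank it afterwards. This assumes each run is stored as a `(num_epochs, batch_size, num_hidden_layers, learning_rate, accuracy)` tuple; `summarize_results` is a hypothetical helper, not part of PyTorch.

```python
def summarize_results(results, top_k=5):
    """Print and return the top_k runs ranked by accuracy (highest first).

    results: list of (num_epochs, batch_size, num_hidden_layers,
    learning_rate, accuracy) tuples (hypothetical structure).
    """
    ranked = sorted(results, key=lambda r: r[4], reverse=True)
    print(f"{'Epochs':>6} {'Batch':>5} {'Hidden':>6} {'LR':>6} {'Accuracy':>8}")
    for epochs, batch, hidden, lr, acc in ranked[:top_k]:
        print(f"{epochs:>6} {batch:>5} {hidden:>6} {lr:>6} {acc:>8.4f}")
    return ranked[:top_k]
```

To use it, create `results = []` before the loops, call `results.append((num_epochs, batch_size, num_hidden_layers, learning_rate, accuracy))` in the innermost loop, and call `summarize_results(results)` once the loops finish.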
